
CNN_MNIST

from tensorflow import keras

import matplotlib.pyplot as plt

import numpy as np

from sklearn.model_selection import train_test_split

# Load the MNIST dataset

(x_train, y_train), (x_test, y_test) = keras.datasets.mnist.load_data()

x_train.shape, x_test.shape

((60000, 28, 28), (10000, 28, 28))

plt.imshow(x_train[0], cmap = "gray_r")

plt.show()

y_train[0]

5

np.unique(y_train, return_counts = True)

(array([0, 1, 2, 3, 4, 5, 6, 7, 8, 9], dtype=uint8),

array([5923, 6742, 5958, 6131, 5842, 5421, 5918, 6265, 5851, 5949],

dtype=int64))

np.min(x_test), np.max(x_test)

(0, 255)

x_train.shape

(60000, 28, 28)

scaled_train = x_train.reshape(-1, 28, 28, 1) / 255

scaled_test = x_test.reshape(-1, 28, 28, 1) / 255

scaled_train, scaled_val, y_train, y_val = train_test_split(scaled_train, y_train, test_size = 0.2,

                                                            stratify = y_train, random_state = 26)

scaled_train.shape, scaled_val.shape, y_train.shape, y_val.shape

((48000, 28, 28, 1), (12000, 28, 28, 1), (48000,), (12000,))
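The reshape-and-scale step above can be checked on a small stand-in array. This is a minimal sketch that uses random uint8 data instead of MNIST (the name `fake_images` and the batch size of 5 are assumptions for illustration). It shows that `reshape(-1, 28, 28, 1)` only adds a trailing channel axis, and that dividing by 255 maps pixel values into the range [0, 1].

```python
import numpy as np

# Hypothetical stand-in for x_train: 5 random grayscale images (not real MNIST data)
rng = np.random.default_rng(0)
fake_images = rng.integers(0, 256, size=(5, 28, 28), dtype=np.uint8)
fake_images[0, 0, 0] = 255  # force the extremes to be present
fake_images[0, 0, 1] = 0

# Same operation as in the post: add a channel axis, then scale to [0, 1]
scaled = fake_images.reshape(-1, 28, 28, 1) / 255

print(scaled.shape)                # (5, 28, 28, 1)
print(scaled.dtype)                # float64
print(scaled.min(), scaled.max())  # 0.0 1.0
```

The channel axis is needed because `Conv2D` expects input of shape (height, width, channels), even for single-channel grayscale images.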

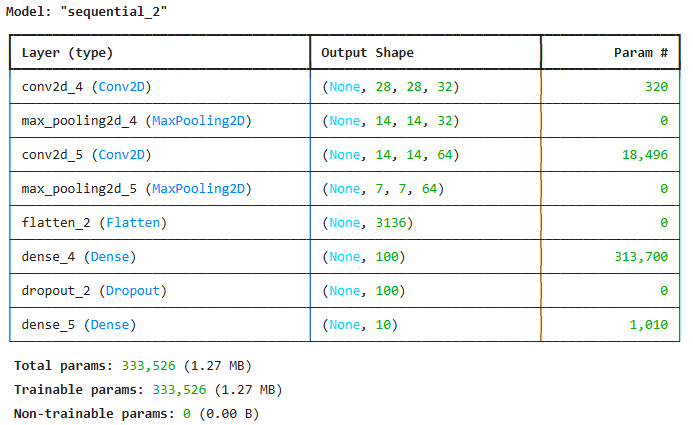

Configuring the convolutional neural network

model = keras.Sequential()

model.add(keras.Input(shape = (28, 28, 1)))

# Hidden layers (free to configure) START

model.add(keras.layers.Conv2D(32, kernel_size = 3, activation = "relu", padding = "same")) # padding = "same" keeps the feature map from shrinking

model.add(keras.layers.MaxPool2D(2))

model.add(keras.layers.Conv2D(64, kernel_size = 3, activation = "relu", padding = "same"))

model.add(keras.layers.MaxPool2D(2))

model.add(keras.layers.Flatten())

model.add(keras.layers.Dense(100, activation = "relu"))

model.add(keras.layers.Dropout(0.4))

# Hidden layers END

model.add(keras.layers.Dense(10, activation = "softmax"))

model.compile(loss = "sparse_categorical_crossentropy", optimizer = "adam", metrics = ["accuracy"])
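To make the loss choice concrete, here is a rough NumPy sketch of what a softmax output combined with `sparse_categorical_crossentropy` computes. The labels stay as integers (not one-hot), and the loss for each sample is the negative log of the probability assigned to the true class. The helper names and toy values are assumptions for illustration, and the sketch omits details of the Keras implementation such as probability clipping.

```python
import numpy as np

def softmax(logits):
    # Subtract the row-wise max for numerical stability before exponentiating
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

def sparse_categorical_crossentropy(probs, labels):
    # Pick the predicted probability of the true class for each sample
    picked = probs[np.arange(len(labels)), labels]
    return -np.log(picked)

# Toy example: 2 samples, 3 classes (made-up values)
logits = np.array([[2.0, 1.0, 0.1],
                   [0.0, 0.0, 0.0]])
labels = np.array([0, 2])  # integer class labels, as in y_train

probs = softmax(logits)
losses = sparse_categorical_crossentropy(probs, labels)
print(probs.sum(axis=1))  # each row sums to 1
print(losses)             # the confident, correct sample has the lower loss
```

The second sample has uniform probabilities, so its loss is log(3), the value for a pure guess among three classes.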

model.summary()
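The output of `model.summary()` is not shown here, but the shapes and parameter counts can be worked out by hand from the layer definitions above. The sketch below does that arithmetic in plain Python. The helper function names are made up for this example, and it assumes the Keras defaults (bias terms enabled, pooling stride equal to pool size).

```python
def conv2d_params(kernel, in_channels, filters):
    # One kernel x kernel x in_channels weight block per filter, plus one bias per filter
    return kernel * kernel * in_channels * filters + filters

def dense_params(in_units, out_units):
    # Full weight matrix plus one bias per output unit
    return in_units * out_units + out_units

h = w = 28                          # input: 28 x 28 x 1
conv1 = conv2d_params(3, 1, 32)     # padding="same" keeps 28 x 28, now 32 channels
h, w = h // 2, w // 2               # MaxPool2D(2): 14 x 14 x 32
conv2 = conv2d_params(3, 32, 64)    # 14 x 14 x 64
h, w = h // 2, w // 2               # MaxPool2D(2): 7 x 7 x 64
flat = h * w * 64                   # Flatten: 3136 values
dense1 = dense_params(flat, 100)    # Dropout adds no parameters
dense2 = dense_params(100, 10)

total = conv1 + conv2 + dense1 + dense2
print(conv1, conv2, flat, dense1, dense2, total)
# 320 18496 3136 313700 1010 333526
```

Most of the parameters sit in the first dense layer, which is typical for small CNNs that flatten directly into a fully connected layer.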

es_cb = keras.callbacks.EarlyStopping(patience = 4, restore_best_weights = True)

history = model.fit(scaled_train, y_train, validation_data = (scaled_val, y_val), epochs = 40,

                    callbacks = [es_cb], batch_size = 32)
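`EarlyStopping` monitors `val_loss` by default and stops training once it has gone `patience` epochs without improving. With `restore_best_weights = True`, the model is rolled back to the best epoch. The following is a simplified pure-Python sketch of that logic, not the Keras implementation itself. The function name and the loss values are made up for illustration.

```python
def early_stopping_epoch(val_losses, patience=4):
    """Return (stopped_epoch, best_epoch), both 0-indexed."""
    best = float("inf")
    best_epoch = -1
    wait = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            # Improvement: remember this epoch and reset the counter
            best, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    # Patience never ran out: training ran for all epochs
    return len(val_losses) - 1, best_epoch

# Hypothetical validation losses (not taken from the training run above)
losses = [0.50, 0.40, 0.35, 0.36, 0.37, 0.36, 0.38, 0.30]
print(early_stopping_epoch(losses))  # (6, 2)
```

In this made-up run, training stops after epoch 6 and the weights from epoch 2 are kept, so the later improvement at epoch 7 is never reached. That is the trade-off of a small `patience`.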

plt.figure()

plt.plot(history.history["loss"], label = "train_loss")

plt.plot(history.history["val_loss"], label = "val_loss")

plt.plot(history.history["accuracy"], label = "train_acc")

plt.plot(history.history["val_accuracy"], label = "val_acc")

plt.xlabel("epoch")

plt.legend()

plt.show()

model.evaluate(scaled_test, y_test)

[0.020437179133296013, 0.9934999942779541]

preds = model.predict(scaled_test)

preds.shape

(10000, 10)

pred_arr = np.argmax(preds, axis = 1)

pred_arr != y_test

array([False, False, False, ..., False, False, False])

x_match = []

for idx, item in enumerate(pred_arr):

    if item != y_test[idx]:

        x_match.append(idx)
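The loop above can also be written as a single vectorized NumPy call. A minimal sketch on toy data follows (the `toy_` arrays are made up for illustration). `np.flatnonzero` returns the indices where the boolean mask is `True`, which gives the same indices the loop collects.

```python
import numpy as np

# Toy softmax outputs for 4 samples and 3 classes (made-up values)
toy_preds = np.array([[0.1, 0.7, 0.2],
                      [0.8, 0.1, 0.1],
                      [0.2, 0.3, 0.5],
                      [0.6, 0.3, 0.1]])
toy_labels = np.array([1, 0, 1, 2])

# Predicted class = index of the highest probability in each row
toy_pred_arr = np.argmax(toy_preds, axis=1)

# Vectorized: indices where the prediction differs from the label
mismatch_idx = np.flatnonzero(toy_pred_arr != toy_labels)

# Loop version, equivalent to the one in the post
loop_idx = [i for i, p in enumerate(toy_pred_arr) if p != toy_labels[i]]

print(toy_pred_arr)   # [1 0 2 0]
print(mismatch_idx)   # [2 3]
print(loop_idx)       # [2, 3]
```

On the real data, `np.flatnonzero(pred_arr != y_test)` would take the place of the `x_match` loop.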

fig, axs = plt.subplots(1, 10, figsize = (15, 15))

for i, item in enumerate(x_match[:10]):

    axs[i].imshow(x_test[item], cmap = "gray_r")

    axs[i].axis("off")

plt.show()

pred_arr[x_match[:10]]

array([4, 6, 3, 9, 9, 5, 1, 2, 6, 2], dtype=int64)

y_test[x_match[:10]]

array([9, 8, 5, 4, 8, 6, 8, 7, 4, 7], dtype=uint8)
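To see which confusions occur among these ten errors, the two arrays printed above can be paired up and counted. This is a small sketch using only the values shown in the output. Ten samples are far too few to draw conclusions about the model as a whole.

```python
from collections import Counter

# Values copied from the two outputs above (first 10 misclassified test samples)
predicted = [4, 6, 3, 9, 9, 5, 1, 2, 6, 2]
actual    = [9, 8, 5, 4, 8, 6, 8, 7, 4, 7]

# Count (true label, predicted label) pairs
pair_counts = Counter(zip(actual, predicted))
# Count how often each true digit appears among the errors
true_counts = Counter(actual)

print(pair_counts.most_common(1))  # [((7, 2), 2)]
print(true_counts[8])              # 3
```

Among these samples, a true 7 predicted as 2 is the only pair that repeats, and 8 is the digit misclassified most often.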
